Elon Musk famously compared the dangers of Artificial Intelligence (AI) to those posed by nuclear weapons, an indication, he said, of the urgent need for regulation of the field.

At present, the difficulty of regulating AI as a whole may rest largely in the lack of a single definition of artificial intelligence, a term that encompasses a wide range of technologies and, hence, applications. Regulating AI would therefore require, at the outset, breaking legal definitions down by specific technology, field of application, and potential outcome.

Following from this is the question of how exactly to regulate AI. Elon Musk’s proposal for a public body with the necessary insight and oversight could be a starting point to build upon. More specifically, regulators could adopt industry-specific AI regulation, or regulate AI outputs rather than the AI itself. Internationally, the European Union (EU) is starting to regulate AI use in financial markets by introducing the Markets in Financial Instruments Directive II (MiFID II), which sets out strict guidelines governing the timestamping and recordkeeping of algorithmic trading events, to an accuracy of within 100 microseconds measured against Coordinated Universal Time (UTC).
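To make the timestamping requirement concrete, here is a minimal Python sketch of what recording a trading event against UTC and checking it against a 100-microsecond tolerance might look like. It is an illustration under stated assumptions, not the directive's actual wording or any trading venue's implementation: the names `TradeEvent`, `record_event` and `within_tolerance` are hypothetical, and the reference UTC time is simply passed in, whereas a real system would obtain it from a synchronised clock source.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Tolerance quoted in the article: 100 microseconds against UTC.
MAX_DIVERGENCE = timedelta(microseconds=100)


@dataclass(frozen=True)
class TradeEvent:
    """Hypothetical record of one algorithmic trading event."""
    order_id: str
    recorded_at: datetime  # timezone-aware, normalised to UTC


def record_event(order_id: str, now: Optional[datetime] = None) -> TradeEvent:
    """Stamp an event with a UTC time (the system clock if none is given)."""
    ts = now if now is not None else datetime.now(timezone.utc)
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return TradeEvent(order_id, ts.astimezone(timezone.utc))


def within_tolerance(event: TradeEvent, reference_utc: datetime,
                     max_divergence: timedelta = MAX_DIVERGENCE) -> bool:
    """Return True if the recorded time diverges from the reference
    UTC time by no more than the allowed tolerance."""
    return abs(event.recorded_at - reference_utc) <= max_divergence


# Usage: compare two recorded events against an assumed reference time.
reference = datetime(2018, 1, 3, 9, 0, 0, tzinfo=timezone.utc)
on_time = record_event("A1", reference + timedelta(microseconds=80))
drifted = record_event("A2", reference + timedelta(microseconds=250))
```

Here `within_tolerance(on_time, reference)` holds while `within_tolerance(drifted, reference)` does not. The sketch regulates an output (the timestamp on a record) rather than the trading algorithm itself, which is the style of rule the paragraph above describes.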

What current regulatory frameworks cannot yet contemplate is what kinds of new technologies will arise in the coming years, and whether new applications will also arise out of older, more established technologies. Perhaps the next step would be to revamp the way regulation itself is done.

AI in the driver’s seat

Machine learning, which forms the bedrock of AI, can itself be divided into many applications, including text and speech analysis, image processing and tagging, 3D environment processing, and data mining, to name a few. Yet the fact that so many of these applications make decisions in an increasingly abstract space, based on machine learning principles, makes it difficult to pinpoint fault should any damage result. Questions arise, such as: at what point was the machine sufficiently aware of an event to have made a timely response?

It is, for instance, possible to attribute fault to humans for erroneous programming or careless operation, but current legal mechanisms fail where the damage cannot be attributed to human error.

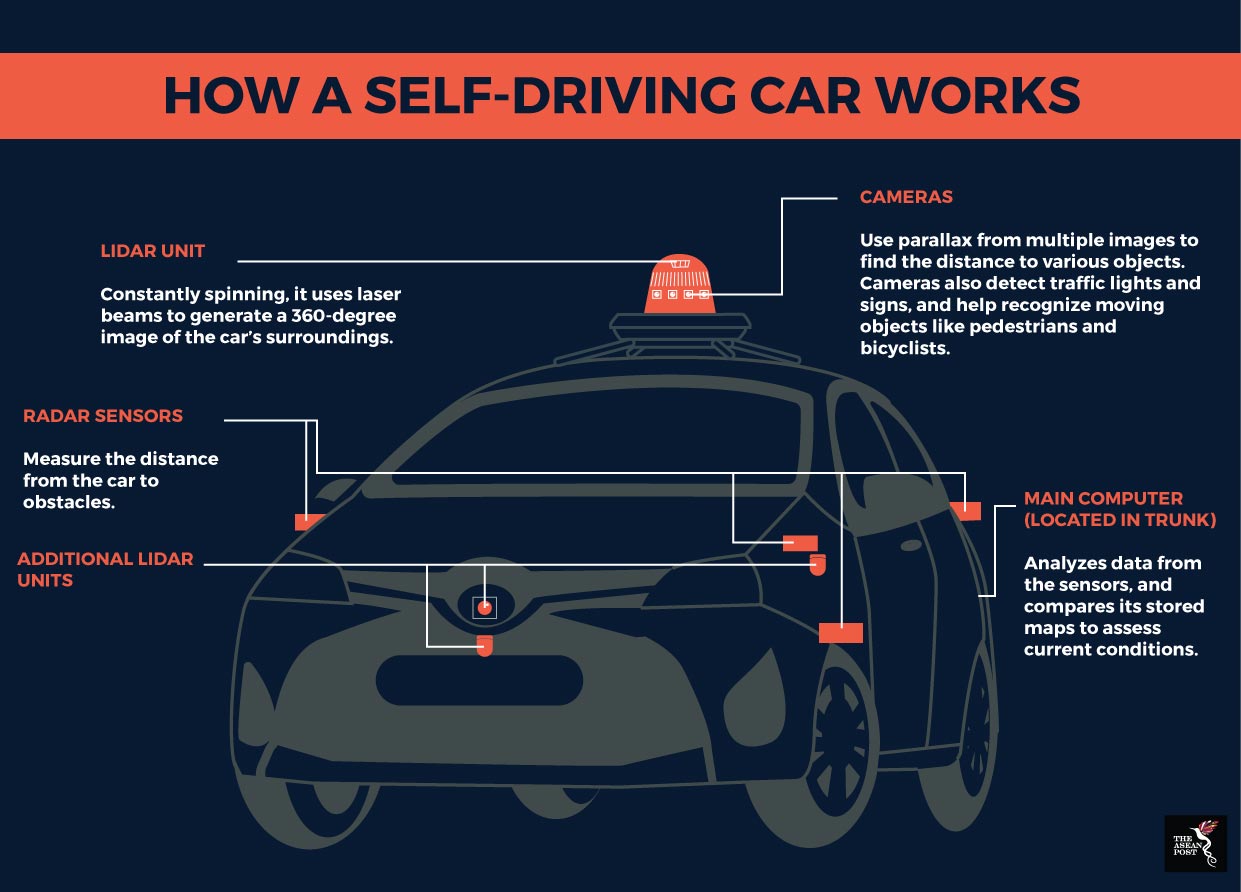

Self-driving technology is one example of AI that is developing in this regulatory grey area. As a whole, self-driving tech utilises lidar (light detection and ranging) sensors, radar sensors, cameras and a computer unit to detect the world around it and respond accordingly. Thus, once it graduates from its current testing stages, it is likely to operate with little human intervention at all.

Even at this stage, there are problems attributing fault for the accidents that happen. A case in point is the recent collision involving an Uber self-driving car, a woman who was killed by the car, and the driver who was behind the wheel at the time. Where ordinary car accidents would normally raise the issue of negligence, self-driving car accidents would likely revolve around whether the product had an inherent design defect.

Without any regulations in place, AI regulation would ultimately be reactive at best, and that may not be good enough, given the limitations of that approach. AI regulation is even more important in sectors like finance and defence, where issues of national security and the national economy could be at stake.

In Southeast Asia, Singapore currently leads the way with its Research Programme on the Governance of Artificial Intelligence and Data Use. The programme has three objectives: 1) to promote cutting-edge thinking and practices, 2) to inform AI and data policy and regulation, and 3) to establish Singapore as a global thought leader in AI and data policy and regulation.

Singapore has also shown foresight in navigating the potential issues arising from introducing self-driving vehicles to its roads. Amendments to the Road Traffic Act were made last year to recognise motor vehicles that are not driven by a human driver. The legislation is nevertheless flexible enough to accommodate the developing stages of the technology. This, then, is the balance all governments need to keep: carving out just enough room for innovation, while keeping developers in check.

According to Ng Chee Meng, Second Minister for Transport in Singapore, it will still be another 10 to 15 years before autonomous vehicle technology can be deployed widely in Singapore. This is also the kind of timeframe that long-term planning for AI regulation has to work with.

As McKinsey & Company observed in its 2017 report, ‘Artificial Intelligence & Southeast Asia’s Future’, AI adoption tends to correlate with the degree of a nation’s digitisation, which is still developing in Southeast Asia. Before focused AI regulation can be properly established in the region, issues like data privacy and security need to be ironed out. Creating an open but secure data environment is a foundational step.

On a global level, it is likely that the earliest AI adopters will have a head start in formulating and structuring AI regulatory frameworks. These will then set the bar for other countries to follow. The issue has never been whether there will be AI regulation, but rather when and how it should be implemented.