You receive an anonymous message with a video attachment on your smartphone. You download the video and, to your shock, see someone you care deeply about, perhaps a family member or your spouse, performing an unspeakable act. When you confront them about the video, they tell you it's fake. Having seen it with your own eyes, do you believe them? Now imagine it's someone you have no personal affection for.

Deepfake, a portmanteau of "deep learning" and "fake", is an artificial intelligence (AI)-based human image synthesis technique. It combines and superimposes existing images and videos onto source images or videos using a machine learning technique called a generative adversarial network (GAN). The result is a fake video that shows one or more people doing something, at an event, that never actually happened.

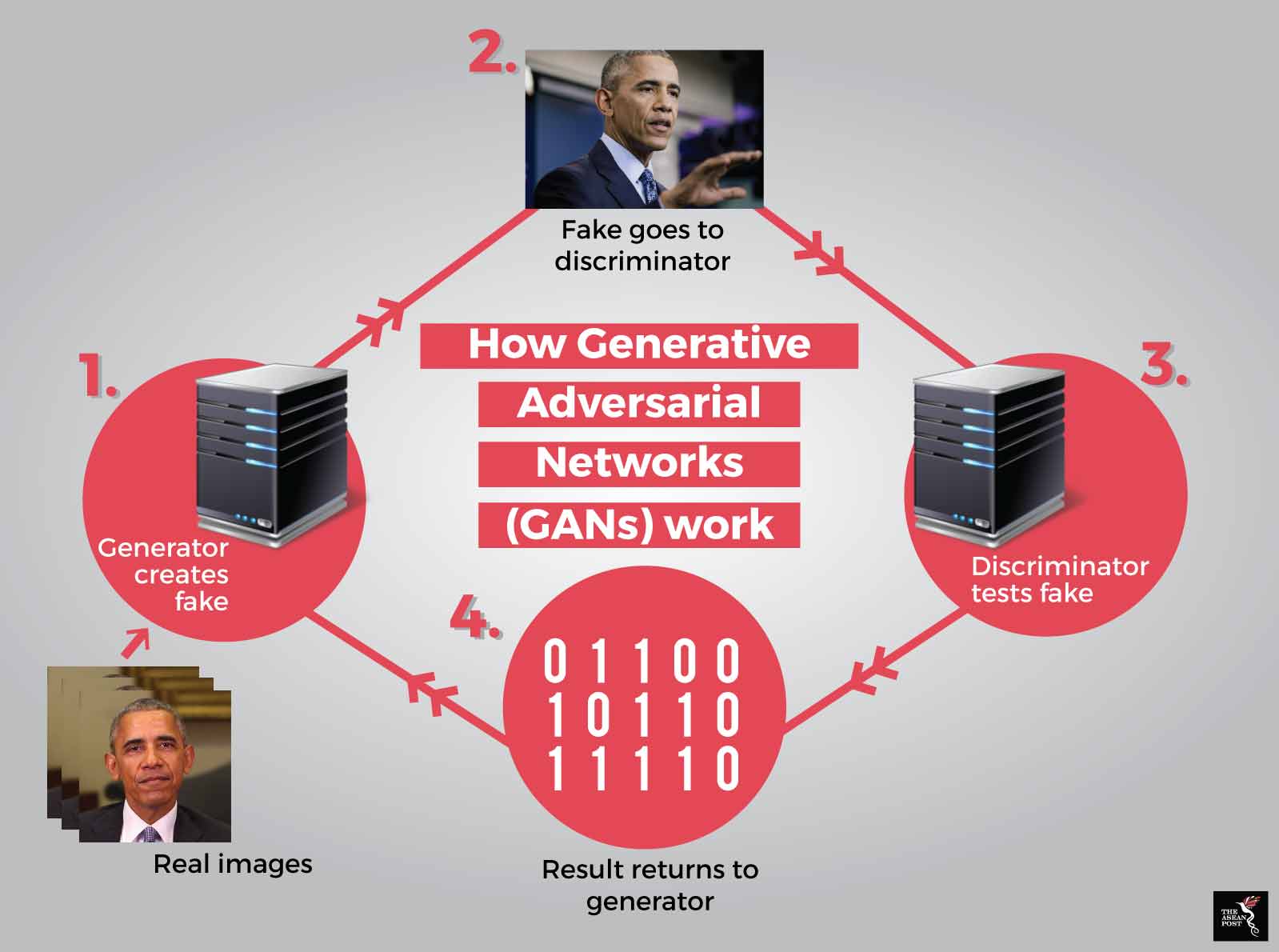

In their most basic sense, GANs pit two neural networks against each other. One network, the generator, produces fakes; the other, the discriminator, evaluates how real they look. The generator's objective is to fool the discriminator until it can no longer tell what is authentic from what is fake.
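For readers who want the formal version, this contest is usually written as the minimax objective that Ian Goodfellow and colleagues proposed when they introduced GANs in 2014. Here $D(x)$ is the discriminator's estimate of the probability that a sample $x$ is real, $G(z)$ is the fake that the generator produces from random noise $z$, $p_{\text{data}}$ is the distribution of real images and $p_z$ is the noise distribution. The discriminator tries to push the value up by scoring real samples near 1 and fakes near 0; the generator tries to push it down by making $D(G(z))$ approach 1. This is the textbook formulation only. Actual face-swapping tools differ in their architectures and training details, so treat it as an illustration of the general idea rather than a description of how any particular deepfake tool works.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

At the theoretical optimum, the generator's output distribution matches the real data and the discriminator outputs $D(x) = \tfrac{1}{2}$ everywhere. In other words, it can no longer distinguish authentic from fake.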

Member states within the technology-welcoming ASEAN bloc are steadily becoming more technologically developed. Singapore leads the pack, Thailand has its Thailand 4.0 initiative, and frontier economies such as Cambodia are also making technological advances. All this, of course, is essential if ASEAN is to remain relevant to the rest of the world as the Fourth Industrial Revolution (Industry 4.0) starts to take off.

Deepfakes, however, represent the shadier (and potentially damaging) flip side of technology.

A brief history

Face swapping has been used in movies for years, but it required skilled video editors and computer-generated imagery (CGI) experts to spend hours to achieve merely decent results.

Then, in December 2017, a user named "DeepFakes" posted realistic-looking explicit videos of famous celebrities on the discussion website Reddit. He created these fake videos by superimposing the celebrities' faces onto the bodies of adult film actors.

Today, technological breakthroughs allow anyone with access to deep learning tools and a powerful graphics processing unit (GPU) to create fake videos far superior to the face swapping seen in older films. No video editing skills are needed, as an algorithm handles the entire process automatically.

The only other thing a person needs to start creating fake videos is a set of sample images of person A and person B. Now think about how many of us have pictures of ourselves posted all over the internet.

Source: Symantec Corporation

The Swiss German-language daily Aargauer Zeitung has warned that the manipulation of images and videos using AI could become a dangerous mass phenomenon.

Revenge porn

Images and videos were being falsified even before video and image editing software existed. What is new about deepfakes, and what makes them so terrifying, is their realism.

Because the technology first surfaced through explicit videos of famous celebrities, those aware of its implications are on high alert. That, coupled with how easy deepfakes are to create, has raised fears of targeted hoaxes and revenge pornography.

Revenge pornography, or revenge porn, is the distribution of sexually explicit images or videos of individuals without their permission. The material may have been made by a partner, with or without the subject's prior knowledge and consent, and perpetrators may also use it to blackmail their subjects.

In the wake of civil lawsuits and a growing number of reported incidents, a number of countries and jurisdictions have passed legislation outlawing the practice, though their approaches vary. The practice has also been described as a form of psychological abuse and domestic violence, as well as a form of sexual abuse.

Political agendas

But revenge pornography is just one of the many problems deepfakes may pose. Deepfakes could also be used to further political agendas. One of the best-known examples shows former United States (US) president Barack Obama seemingly saying things he never said. The video was actually a public service announcement (PSA) about fake news.

Political manipulation is only one of the worries brought on by advances in deepfake technology; with fake videos, the possibilities for misuse are nearly limitless. Those who have seen deepfakes know that small quirks can still give a fake away, but it's only a matter of time before the technology becomes so advanced that it will be almost impossible to tell a deepfake from reality. And if you think we're faking that, go ahead and search online for the Jennifer Lawrence – Steve Buscemi deepfake mashup. You may not believe your eyes!